With the closing of DEF CON 25 and our last year of running Capture The Flag, I figured a post about what it took to create cLEMENCy was in order. This is a very long write-up detailing what I went through while developing cLEMENCy; it leaves out all the work that went on alongside it to create the other challenges for DEF CON CTF 24 and 25. Hopefully this post shows the amount of dedication it can take to run DEF CON CTF. When I joined Legitimate Business Syndicate in January 2014, I made it known to the team that for our last year I wanted to do a fully custom architecture. As luck would have it, the Cyber Grand Challenge (CGC) happened in 2016, which allowed me to take a backseat on most of the CTF challenges and focus my evenings on the emulator and tool development.

My first processor document was created on August 11, 2014. Highlights were:

- Stack growing upwards

- Little Endian

- 25 instruction groups

- 32 registers that could be used as integer or floating point

- 8 interrupts

- DMA transfers that took the ID of the device you wanted to talk to in a register, along with all other potentially relevant areas

- Memory protections

There were questions about whether I should add logic to allow threading, and whether the firmware would be static or use a custom format that allowed modules. A month later, Gyno dropped in notes about the Hexagon DSP, the Mill, and the Cell architecture. I had also tossed around ideas related to Harvard architectures, such as swapping out the opcode lookup table as opcodes execute, tying a unique table to each opcode to obfuscate the code.

2015

I began laying out the basics of the opcodes and started writing actual emulator code at the end of November 2015, almost a full 2 years after joining the team, having pondered design ideas the whole time. Development started shortly after my post-finals break from CTF that year, following a number of rapid updates and changes to the architecture idea document. Although the original architecture document called it “Lightning CPU”, I officially named the project “DMC” (Defcon Middle-endian Computer) in December: I own a DeLorean and wanted to tie in a reference to the DeLorean Motor Company.

A large amount of development on the emulator happened at the end of December thanks to a 2-week vacation. By the end of December, the emulator had 23 files, 1,867 lines of code, and 344 lines of comments. This obviously does not count refactoring and the reworking that occurred in the early stages; still, a total of 2,211 lines in around 30 days was not bad (almost 74 lines a day). The specification was adjusted and refined during this time while Thing2 and I chatted about middle-endian. Ideas were still floating around about replicating the SPARC sliding register window and allowing registers to be combined to expand the number of bits used for math.

I set a personal goal of keeping things simple enough that teams could learn the architecture in less than a week, with the idea of just handing them a manual a week before the competition. The end of 2015 was spent on random ideas and tweaks to make it more RISC-like and on refining the running document to remove complexities, tailoring the architecture to be easy and quick to learn.

In order for the team to be able to develop challenges, I needed to have a full toolset for them to work with. In January 2016 I started creating an LLVM configuration for the clang compiler after giving up on adapting GCC to middle-endian. The complexity of modifying LLVM led me to look at a number of simple C compilers, including TCC, before stumbling across NeatCC (NCC), developed by Ali Gholami Rudi. Its benefits were that the per-architecture core file was simple, and its author also had a lightweight libc that compiled with it, along with a linker that supported the ELF object format for linking together multiple C files.

2016

The first few months of 2016 involved minor modifications to NeatCC to get it ready for creating DMC firmware, along with a Python script that auto-generated a Sqlite3 database of opcode layouts. The database was planned so that it could auto-generate a header file of instruction data for the C disassembler the emulator would eventually have. It would also drive the planned Python assembler and disassembler, avoiding massive parsing code, and be used to create the documentation.

By mid-April 2016, NCC, the Neat linker (NLD), and the emulator were usable, and I started testing the tools against the emulator. During this period I wrote the Python script that parsed the Sqlite3 database and generated the initial HTML documentation, and added more instructions. NCC could not embed assembly, so I created an external assembler, the Lightning Assembler (LAS). In June the architecture was renamed “cLEMENCy” (LEgitbs Middle ENdian Computer) and all code was updated to reflect it. I wasn’t completely happy with the DMC name and enjoyed that clemency means mercy, which I was showing by not going all-out on complexity.

It was during DEF CON 24 CTF Finals that things changed. Thing2 and I were talking that Friday about how to break tools with the architecture when I had an epiphany! I asked him if making all bytes 9 bits would break everything, and he could only smile at the idea. I ran it past the rest of the LegitBS team and the consensus was that, if I could prove it would work, then why not? While all the competing teams worked on finals that year, I was creating a proof-of-concept. By the end of the competition, I was able to prove that I could not only convert between 8- and 9-bit formats easily, but that I could make it work with the tooling and setup I had previously developed.
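To make the idea of converting between 8- and 9-bit formats concrete, here is a minimal C sketch that packs 9-bit bytes into a continuous stream of ordinary 8-bit octets and unpacks them again. It is not the actual cLEMENCy tooling: the names `packed_size`, `pack9`, and `unpack9` are hypothetical, each 9-bit byte is assumed to live in the low bits of a `uint16_t`, and the bit order (most significant bit first, zero-padded at the end) is an assumption chosen for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Number of octets needed to hold n 9-bit bytes (rounded up). */
size_t packed_size(size_t n) {
    return (n * 9 + 7) / 8;
}

/* Pack n 9-bit bytes (low 9 bits of each uint16_t) into an MSB-first
 * bitstream of 8-bit octets. out must hold packed_size(n) octets. */
void pack9(const uint16_t *in, size_t n, uint8_t *out) {
    uint32_t acc = 0;  /* bit accumulator */
    int bits = 0;      /* number of valid bits in acc */
    size_t o = 0;
    for (size_t i = 0; i < n; i++) {
        acc = (acc << 9) | (in[i] & 0x1FFu);
        bits += 9;
        while (bits >= 8) {
            bits -= 8;
            out[o++] = (uint8_t)(acc >> bits);  /* next 8 bits */
        }
        acc &= (1u << bits) - 1;  /* drop bits already emitted */
    }
    if (bits > 0)  /* pad the final partial octet with zero bits */
        out[o++] = (uint8_t)(acc << (8 - bits));
}

/* Inverse of pack9: read n 9-bit bytes back out of the octet stream. */
void unpack9(const uint8_t *in, size_t n, uint16_t *out) {
    uint32_t acc = 0;
    int bits = 0;
    size_t o = 0;  /* index of the next octet to consume */
    for (size_t i = 0; i < n; i++) {
        while (bits < 9) {
            acc = (acc << 8) | in[o++];
            bits += 8;
        }
        bits -= 9;
        out[i] = (uint16_t)((acc >> bits) & 0x1FFu);
        acc &= (1u << bits) - 1;  /* keep only unread bits */
    }
}
```

Eight 9-bit bytes fill exactly nine octets, so any length that is a multiple of 8 packs without padding.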

By the end of September, the instruction format, assembler, disassembler, NLD, and NCC were converted over to the new 9-bit byte layout. In the process, I came across a number of parsing issues in NCC that were not showing up in the latest release. I made a new pull of NeatCC, NeatLD, and NeatLibc in the beginning of October, spending 2 weeks dealing with a massive merge and rewrite of the code to make it compatible with the new setup.

Mid-October 2016 marked the beginning of modifications to adapt the emulator to the 27-bit, 9-bits-per-byte setup, another 2-week process. During the rewrite I also began adding debugger and disassembler functionality to the emulator, covering the simple, expected features: dumping registers, breakpoints, and single stepping, to name a few.

The emulator was a bit slow, though, so instead of adding floating point support to NCC, I chose to remove the floating point code. This eliminated a mask, compare, and branch from most of the opcodes, but required adding a way to indicate whether the processor supported floating point so the documentation did not have to change. This improved the emulator's speed to around 8 million instructions/sec on my old laptop, and I deemed it good enough since the competition infrastructure would be beefier. The biggest speed hit appeared to be the constant shifting and masking required to handle 9-bit byte access.
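The shifting and masking cost is easy to see in a sketch of how an emulator might assemble a 27-bit value from three 9-bit bytes. This assumes, hypothetically, that each 9-bit byte of emulated memory is stored in its own `uint16_t`, and for simplicity it combines the bytes in plain big-endian order; cLEMENCy itself is middle-endian, and its actual byte order is defined in the architecture manual rather than reproduced here. `read27` and `write27` are illustrative names.

```c
#include <assert.h>
#include <stdint.h>

#define BYTE_MASK 0x1FFu      /* one 9-bit byte */
#define TRI_MASK  0x7FFFFFFu  /* one 27-bit value */

/* Assemble a 27-bit value from three consecutive 9-bit bytes.
 * Assumption: first byte is most significant (NOT cLEMENCy's real order).
 * Each byte is masked in case stray bits sit above bit 8. */
uint32_t read27(const uint16_t *mem, uint32_t addr) {
    return ((uint32_t)(mem[addr] & BYTE_MASK) << 18) |
           ((uint32_t)(mem[addr + 1] & BYTE_MASK) << 9) |
           (uint32_t)(mem[addr + 2] & BYTE_MASK);
}

/* Split a 27-bit value into three 9-bit bytes (same assumed order). */
void write27(uint16_t *mem, uint32_t addr, uint32_t value) {
    value &= TRI_MASK;  /* discard anything above bit 26 */
    mem[addr]     = (uint16_t)((value >> 18) & BYTE_MASK);
    mem[addr + 1] = (uint16_t)((value >> 9) & BYTE_MASK);
    mem[addr + 2] = (uint16_t)(value & BYTE_MASK);
}
```

Every load and store pays for several shifts, masks, and ORs like these, which fits with them being the main cost once the emulator runs millions of instructions per second.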

November 2016 saw cLEMENCy-specific modifications and assembly files added to NeatLibc, and the first full firmware images built across the whole toolchain for the emulator to run, beyond the simple assembly tests done after the 9-bit conversion. I had to create a custom memory allocator in NeatLibc because the original relied on mmap() and the firmware had a fixed amount of memory to work with. LAS was modified to write compatible ELF objects so that the assembly could be linked in.
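A fixed-memory replacement for an mmap()-based malloc can be as simple as a first-fit free list carved out of a static region. The sketch below is a generic illustration, not the allocator written for NeatLibc: `ARENA_SIZE`, `arena_malloc`, and `arena_free` are hypothetical, it targets an ordinary 8-bit-byte host, and it splits blocks but never coalesces freed neighbours. The property it demonstrates is that freed blocks are marked reusable and handed out again on later requests.

```c
#include <assert.h>
#include <stddef.h>

#define ARENA_SIZE 4096                 /* hypothetical fixed "firmware RAM" */
#define ALIGN _Alignof(max_align_t)     /* alignment for every payload */

typedef struct block {
    size_t size;         /* usable payload bytes after the header */
    int free;            /* 1 if available for reuse */
    struct block *next;  /* next block in address order */
} block_t;

static _Alignas(max_align_t) unsigned char arena[ARENA_SIZE];
static block_t *head = NULL;

static size_t align_up(size_t n) { return (n + ALIGN - 1) & ~(ALIGN - 1); }

void *arena_malloc(size_t n) {
    size_t need = align_up(n == 0 ? 1 : n);
    size_t hdr = align_up(sizeof(block_t));
    if (head == NULL) {  /* lazily turn the whole arena into one free block */
        head = (block_t *)arena;
        head->size = ARENA_SIZE - hdr;
        head->free = 1;
        head->next = NULL;
    }
    for (block_t *b = head; b != NULL; b = b->next) {
        if (!b->free || b->size < need)
            continue;  /* first fit: skip used or too-small blocks */
        /* Split off the tail if it can hold a header plus some payload. */
        if (b->size >= need + hdr + ALIGN) {
            block_t *rest = (block_t *)((unsigned char *)b + hdr + need);
            rest->size = b->size - need - hdr;
            rest->free = 1;
            rest->next = b->next;
            b->size = need;
            b->next = rest;
        }
        b->free = 0;
        return (unsigned char *)b + hdr;
    }
    return NULL;  /* fixed memory exhausted: no mmap() to fall back on */
}

void arena_free(void *p) {
    if (p == NULL)
        return;
    block_t *b = (block_t *)((unsigned char *)p - align_up(sizeof(block_t)));
    b->free = 1;  /* mark for reuse; neighbours are not merged here */
}
```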

The rest of November and December was spent squashing bugs, adding enhancements, and writing assembly files for things like millisleep (similar to nanosleep). During this time I also added support for inverting the stack by recompiling ncc as ncc-inv. The fun of tracking bugs at this point was determining whether the emulator, disassembler, assembler, linker, or compiler was the culprit. Some bugs were improper masking in the emulator, some were the C compiler emitting the wrong values due to improper bit combining, and others came from the linker writing offsets incorrectly or miscalculating where to modify data. While trying to validate issues, I would also find bugs in edge cases of the assembler and disassembler.
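For readers unfamiliar with the nanosleep comparison, a millisecond sleep is typically a thin wrapper that converts milliseconds into a `struct timespec` and retries when a signal interrupts it. The cLEMENCy version was written in assembly for the firmware and is not shown in this post, so this is only a host-side POSIX C sketch of the same idea; the `millisleep` signature and its return convention here are assumptions.

```c
#define _POSIX_C_SOURCE 199309L
#include <assert.h>
#include <errno.h>
#include <time.h>

/* Sleep for ms milliseconds, resuming after signal interruptions.
 * Returns 0 on success, -1 on error with errno set. */
int millisleep(long ms) {
    struct timespec req, rem;
    if (ms < 0) {
        errno = EINVAL;
        return -1;
    }
    req.tv_sec = ms / 1000;
    req.tv_nsec = (ms % 1000) * 1000000L;
    while (nanosleep(&req, &rem) == -1) {
        if (errno != EINTR)
            return -1;
        req = rem;  /* interrupted: continue with the remaining time */
    }
    return 0;
}
```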

2017

By mid-January 2017 enough bugs had been squashed that Perplexity, my Finals challenge that I’m sad to say was never finished, was being used to test functionality fairly rigorously. Perplexity’s goal was to make people question whether I had also created a C++ compiler for the architecture on top of everything else. It was a C binary with structures set up to allow vtable configurations, and NCC was modified to allow two colons side by side in a function name so that everything looked like C++ during development. In January 2017 the plan was to release just the architecture manual to the teams, although there was debate about how early the teams should have it. There was also a question of whether all challenges would be written for it, since the rest of the LegitBS team had not used any of the tools yet.
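Perplexity's trick of making C look like C++ relied on structures arranged like vtables. Here is a minimal sketch of that general technique in standard C: each object's first member points at a table of function pointers, and calls dispatch through it. The `shape` types and function names are hypothetical, and because standard C does not allow `::` in identifiers (that required the NCC modification mentioned above), ordinary names are used instead.

```c
#include <assert.h>
#include <string.h>

struct shape;

/* The "vtable": one function pointer per virtual method. */
struct shape_vtable {
    int (*area)(const struct shape *self);
    const char *(*name)(const struct shape *self);
};

/* Every object starts with a pointer to its class's vtable. */
struct shape {
    const struct shape_vtable *vtable;
    int w, h;
};

static int rect_area(const struct shape *s) { return s->w * s->h; }
static const char *rect_name(const struct shape *s) { (void)s; return "rect"; }

static int tri_area(const struct shape *s) { return s->w * s->h / 2; }
static const char *tri_name(const struct shape *s) { (void)s; return "tri"; }

/* One shared, read-only table per "class". */
static const struct shape_vtable rect_vtable = { rect_area, rect_name };
static const struct shape_vtable tri_vtable  = { tri_area, tri_name };

/* "Virtual" calls go through whichever table the object carries. */
int shape_area(const struct shape *s) { return s->vtable->area(s); }
const char *shape_name(const struct shape *s) { return s->vtable->name(s); }
```

From a reverse engineer's point of view, the indirect calls through a table pointer stored at offset 0 look much like compiled C++ virtual dispatch.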

By the end of January 2017, the emulator, assembler, backend file for the C compiler, and custom files for NeatLibc totaled 54 files and 13k

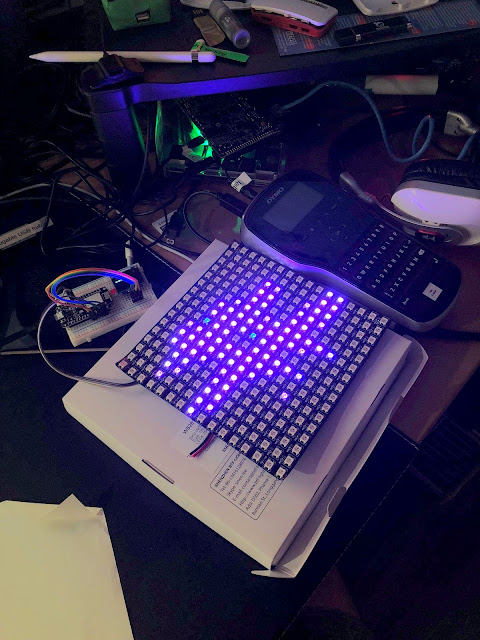

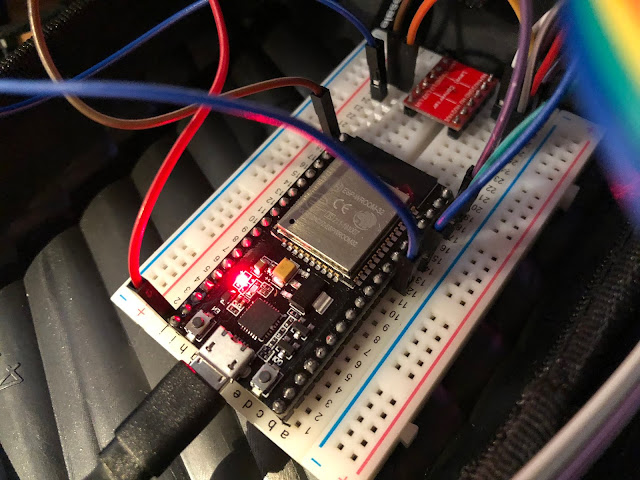

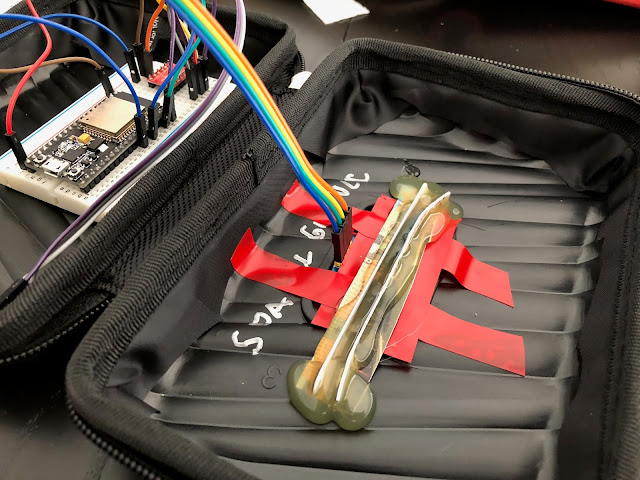

total lines of pure code not counting comments. Sirgoon had begun working on a physical version of the processor on a FPGA, however due to personal things this was scrapped. If anyone ever makes this into hardware I would love a copy of it.

The tools and emulator were stable enough in my testing that I had nothing left to fix until others began using them and reporting bugs, so I focused on writing my finals challenge. Work progressed on Perplexity along with random additions to cLEMENCy's debugging abilities. At this point there was still no plan to release anything to the teams other than the manual, which had not been modified since the end of December 2016.

In April I added the DBRK instruction to help pinpoint parts of the code after recompiling. Although the map file existed, it had no line-specific information, so DBRK made it faster to find specific areas after a recompile. It also let me quickly test theories about how to land some of the bugs I had already added to Perplexity.

For our last time running quals, we decided to rent a house. During this time I continued working on Perplexity, and showing the team the tools in person was very helpful, since face to face they could get answers to questions and issues faster. It was also requested during this time that shared memory be added, and as a bonus I added NVRAM memory. There were plans for a challenge to use the shared memory, but I’m not aware of it actually happening. During quals we determined that one connection per team per service would limit the load on the boxes, and no planned challenges required multiple connections, so this limitation was implemented and tested during quals.

After quals, Vito started getting a physical manual created, Selir worked on porting old challenges so we could benchmark how much processing power was needed with all services under attack, and the rest of the team began using the tools to create and port the challenges they had been working on. This became a pressure point for me, with requests on the documentation, tools, and architecture coming from every direction, and of course bugs began to show up.

I created a separate Slack channel to track just emulator changes, so the team could stay aware of them and report issues. It was not uncommon for a single day to have 10+ small changes and bug fixes to the tools and emulator, with weekends reaching 20+. As we moved closer to Finals, the rate of updates and bug fixes increased.

To give a taste, here are a few of the bugs fixed in a single weekend:

- Some of the branch compares had improper checks resulting in invalid if statements

- Memory protections on the flag area needed to be enforced

- Millisleep and strcpy having edge cases to correct

- Exiting debug in certain situations resulted in an unusable terminal

- Timers not firing properly

- Signed immediate issues

Normally when challenges for Finals were created, a number of bugs would be added to the service, with proofs of concept showing at least control of PC and the ability to continue. Because of the new architecture, I made a request that the team accepted and agreed on: any bug in a challenge must be proven to be able to return the flag. This led to the creation of a rop-search tool to help prove that bugs could be landed in the challenges, and it exposed an issue: our limited code size and lack of threading meant it was nearly impossible to do a stack pivot if you only controlled a register and PC.
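A rop-search tool typically starts by locating every return instruction and treating the bytes just before it as candidate gadgets. The sketch below shows only that first step, under loud assumptions: the return encoding passed in as `ret` is made up for the example (the real cLEMENCy encoding is in the manual), code is treated as an array of 9-bit bytes held in `uint16_t`, and `find_gadgets` and `gadget_t` are hypothetical names. A real tool would then disassemble each window and discard starts that do not decode into valid instructions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    size_t start;  /* first byte of the candidate gadget */
    size_t end;    /* index just past the return sequence */
} gadget_t;

/* Find every occurrence of the (hypothetical) return byte sequence and
 * report each window of up to max_back bytes that ends with it.
 * Returns the total number of candidates; only the first cap are stored. */
size_t find_gadgets(const uint16_t *code, size_t n,
                    const uint16_t *ret, size_t ret_len,
                    size_t max_back, gadget_t *out, size_t cap) {
    size_t found = 0;
    if (ret_len == 0 || ret_len > n)
        return 0;
    for (size_t i = 0; i + ret_len <= n; i++) {
        size_t k = 0;
        while (k < ret_len && (code[i + k] & 0x1FFu) == (ret[k] & 0x1FFu))
            k++;
        if (k != ret_len)
            continue;  /* no return sequence at offset i */
        size_t lo = i > max_back ? i - max_back : 0;
        for (size_t s = lo; s <= i; s++) {
            if (found < cap) {
                out[found].start = s;
                out[found].end = i + ret_len;
            }
            found++;
        }
    }
    return found;
}
```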

Gyno came up with the idea of a page of memory that would have gadgets in it. Vito and I were about ready to get the physical manuals printed, but I had not figured out the exact details of this new memory area. I decided to leave it out of the official documentation, reasoning that a physical processor wouldn’t have this memory page: it came purely from the emulator and, being in the DMA area, could be seen as a separate device. I created a script to auto-generate a random-character text version of the LegitBS logo, combined with text to look like an NFO from a warez leak. The concept was to leave a few hints: the help menu telling players to enjoy the NFO section, and an ELF section named NFO containing the normal ASCII text. Reading it would tell them where the NFO was loaded in memory, and the random characters masked embedded ROP gadgets for pivoting from any register to the stack.

Near the end of June 2017 we decided that the teams would be given the architecture manual and a copy of the emulator, with its built-in debugger and disassembler, 24 hours in advance. We decided this after watching lunixbochs (on the usercorn team) implement the NDH CPU in an emulator in a few hours. Releasing the emulator and built-in tools guaranteed, at minimum, that everyone had the tools required to compete, and avoided time being wasted at the start of the game creating tools. I had not planned on teams having the emulator, so Perplexity development was shelved. At that point Perplexity was 43 files and 2,808 lines of code, not counting comments. I was sad to shelve it, but I needed to make sure the emulator was ready for teams. This involved building 3 versions of the emulator: the production version with seccomp and debugging stripped out, our debug build, and the team debug build with the instruction and register state history stripped out.

Near the end of the last week before Finals, things appeared to be going smoothly. The emulator was chugging away at 7M instructions/sec on my laptop, and we were not overloading the infrastructure we were testing with multiple sample binaries. Selir had configured random connections and data for the sample binaries between all the fake teams to help stress test and watch for CPU spikes. Then I made a painful discovery: I had made a mistake in a select statement.

Can you spot the mistype? Changing a single 1 to a 0 had my poor laptop cranking out 40M instructions/sec easily, almost a 6x speedup. The speed boost was nice, but I had to keep from kicking myself for tossing floating point early in the process over such a simple mistype. We were close enough to game day that adding floating point back in was risky, and it wasn't needed since challenges had already been written to work around and avoid its use.
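The offending select statement is not reproduced in this post, so the following is only a guess at the class of mistake, not the actual emulator code. In a POSIX `select()` call, a `struct timeval` of all zeros makes the call return immediately, while any non-zero timeout allows it to block; if such a check runs inside the emulator's main loop, the difference can dominate throughput. `fd_readable` is a hypothetical helper.

```c
#include <assert.h>
#include <sys/select.h>
#include <sys/time.h>
#include <unistd.h>

/* Return 1 if fd has data ready, 0 if not, -1 on error, without blocking.
 * A zeroed timeout makes select() return immediately; a non-zero one
 * (even 1 microsecond) may put the caller to sleep on every poll. */
int fd_readable(int fd) {
    fd_set rfds;
    struct timeval tv;
    FD_ZERO(&rfds);
    FD_SET(fd, &rfds);
    tv.tv_sec = 0;
    tv.tv_usec = 0;  /* poll only: do not wait at all */
    int r = select(fd + 1, &rfds, NULL, NULL, &tv);
    if (r < 0)
        return -1;
    return r > 0 && FD_ISSET(fd, &rfds);
}
```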

Game Day

I arrived in Vegas on Tuesday, July 25. In previous years I always had a challenge in finals and was making last-minute changes right up until game start: adding bugs, testing functionality, and enhancing the poller script. This year was different; I had no challenge, just the architecture. The team was spread out across multiple hotel rooms and a lot of the time I was just sitting around, so I decided to toss my laptop in a backpack and wander Caesars. The team knew I had my phone, and a ping on Hangouts or Slack with a room number would result in me showing up. I had only ever gone to DEF CON for CTF, 6 years straight and always busy. I had already competed for 3 years and helped run it for 3 years, so having my 7th year to just wander was odd and surreal. I wandered around while the team finalized their challenges, with nothing to really accomplish until an issue was discovered or someone needed help. On Thursday we released everything and were alerted to a couple of mistypes in the manual. The answer and fix were delayed because I was off in the swag line and had to get back to one of the rooms, as I refused to use my WiFi. Thursday is also when a clang bug was discovered: the -O3 optimization was causing an edge case in the networking code. After a couple of hours of testing and watching the bug appear and disappear purely based on adding debug logic, I recompiled with -O2 and everything worked as it should have.

Friday: I hated this day with a passion. From everyone else’s perspective Friday kicked off well: challenges appeared to go off without issue, HITCON landed 3 different first bloods, and everything seemed to run like clockwork. Behind the scenes I couldn’t stop shaking before game start and refused to drink anything, being a lightweight; I needed to be able to think straight if a last-minute fix was needed because things went to hell. We had done testing, and we were running a version of the emulator that no team had, to help guard against a breakout if one existed, but if something broke it would likely be on my head. I was stressed.

Midday Friday a bug was discovered in the custom malloc: it wasn’t re-handing out freed blocks, and once that was fixed, 2 unreleased services with high memory usage would randomly fault. Sirgoon and I spent all of Friday afternoon going through the allocator. By the end of Friday I was done and actually feeling sick, probably from the stress I put on myself. We had fixed one of the services, but the other continued to act up for unknown reasons. Sirgoon and Selir saved me: on Saturday morning I found out they had stayed up after Friday’s competition, hand-verified the allocator with each other, and found no faults in it beyond what was fixed Friday afternoon. They then started looking at the challenge itself and found a null deref. Because of the offset, the lookup of the allocated block information wrapped around to high memory. This high memory was Read/Write, but writes were ignored, so instead of crashing, invalid information leaked into the memory block chain. The rest of the weekend was far more relaxing, and a huge weight was off my shoulders.
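The wraparound can be shown with a little arithmetic. Assuming, as the 27-bit setup suggests, that addresses are reduced modulo 2^27, an allocator that reads its block header at a negative offset from a pointer will, given a NULL pointer, read from the very top of memory instead of faulting at address 0. The offset of -3 used below is purely illustrative, since the post does not give the real header offset, and `effective_address` is a hypothetical helper.

```c
#include <assert.h>
#include <stdint.h>

#define ADDR_BITS 27
#define ADDR_MASK ((UINT32_C(1) << ADDR_BITS) - 1)  /* 0x7FFFFFF */

/* Effective address of ptr + offset in an assumed 27-bit address space.
 * Unsigned addition wraps modulo 2^32; the mask reduces it modulo 2^27,
 * so small negative offsets from 0 land at the top of memory. */
uint32_t effective_address(uint32_t ptr, int32_t offset) {
    return (ptr + (uint32_t)offset) & ADDR_MASK;
}
```

With these assumptions, `effective_address(0, -3)` yields 0x7FFFFFD, an address in high memory rather than a fault near zero.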

Closing

Back in 2014, two teams approached me at Finals before game start, swearing up and down that we had a custom architecture that year, since they both knew my background. I pointed out the difficulty of creating something custom and the tooling and testing required, trying to give the impression that it was hard. When it was announced at the 2016 closing ceremonies that we were doing a custom architecture, the room went quiet for a moment; it was eerie. A number of the top teams knew me as the one who created the hardest challenges, and also knew that if a custom architecture was done I would be involved. Although teams did not know I was the sole creator of the architecture until shortly before the contest, I heard rumors that teams were afraid of what would be created due to my involvement. I’m actually proud that I struck fear into teams. Balancing ease of learning with complexity and something new is not easy. I spent a lot of time tweaking ideas and judging whether the teams would adapt well enough before writing any actual code. I could have done far worse, but I am glad to see my years of effort paid off and that it was thoroughly enjoyed.

I have created a number of custom architectures during my years of development, and I hope that my challenges and cLEMENCy will leave a mark on the CTF scene. I thoroughly enjoyed creating my masterpieces and also learned that some people on my team and on the competing teams think I am insane. I am going to have Perplexity, with all of my notes, pushed to the LegitBS repo when the finals challenges are pushed. It was never finished but shows what could have been. Thank you to Legitimate Business Syndicate not only for asking me to join the team but also for supporting my ideas and plans. I also have to thank each and every player who took on my challenges over the years, even if they didn’t solve them. I hope I helped others strive to learn new things and push their knowledge limits.

-Lightning